Fusion Quill AI 4.2

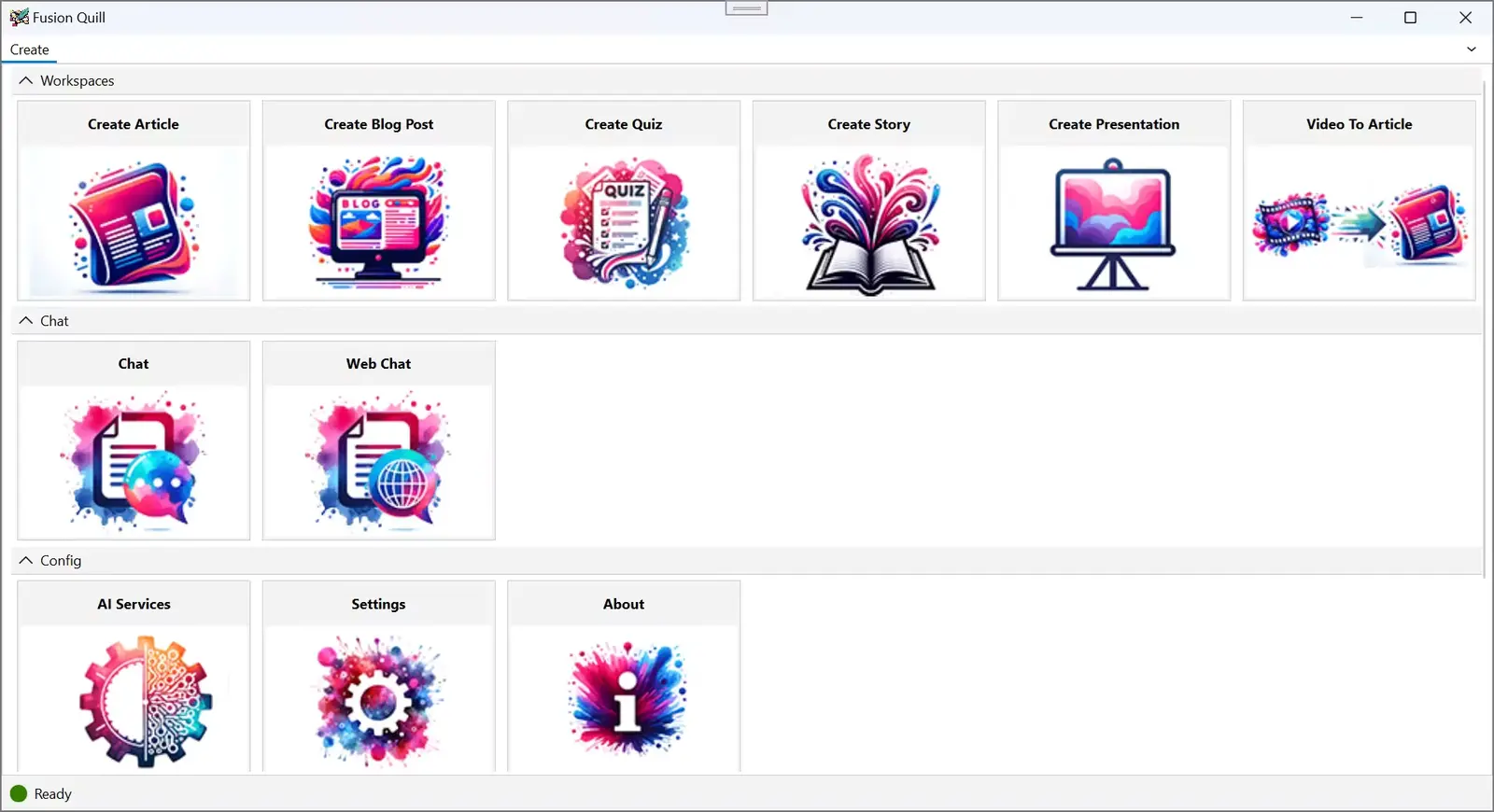

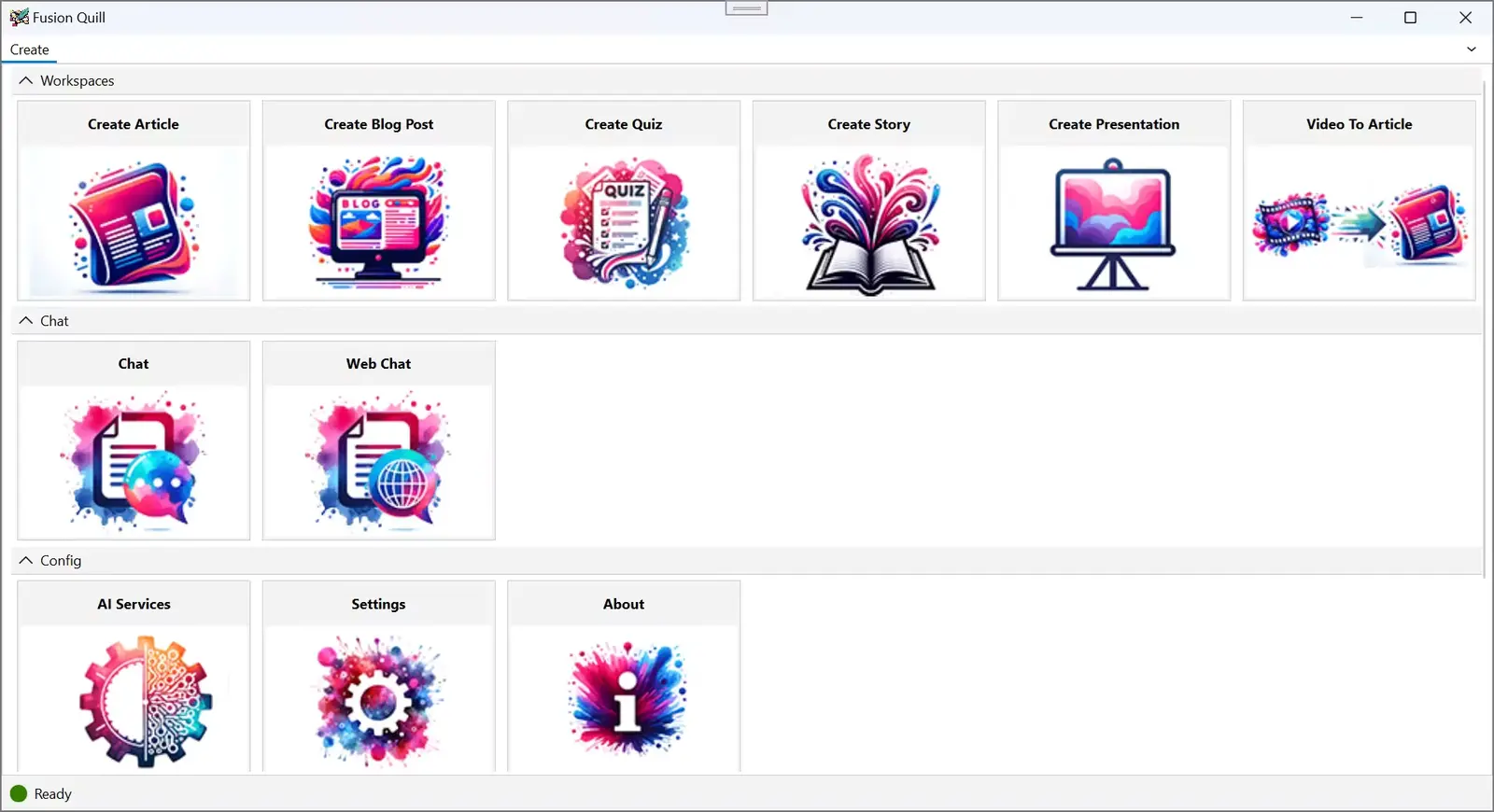

Securely integrating AI, user experience, and workflow with your data. Imagine Fusion Quill: your AI wizard for handling mundane work! Fusion Quill makes AI accessible to everyone, eliminating the need for complex prompting. Experience the future of AI interaction with Fusion Quill's intuitive Wizard Workflows, AI Word Processor, and Chat user interfaces.

Bringing Together AI, User Experience, Workflow, and Your Data

At Fusion Quill, we believe in the power of AI to transform the workplace, making it more efficient, innovative, and inclusive. For AI to truly make an impact, it needs to seamlessly blend into the daily workflows of information workers like you.

Our Solution: We use user experience metaphors that information workers already know and integrate them with AI, workflows, and your data.

Accessibility

No specialized AI Prompting knowledge required.

BYOAI

Connect to Any AI model. No Lock-in!

Integration

By connecting AI with your existing data and workflows, we empower you to achieve more without overhauling your processes.

Empowerment

Our goal is to demystify AI and put its power into your hands, ensuring you can leverage it to enhance your work, not replace it.

Fusion Quill connects to Local Models and API models.

- Local Models – GGUF Models from Hugging Face.

- API Models – OpenAI, Azure AI, Google Gemini, Amazon Bedrock, Groq, Ollama, Hugging Face, vLLM, llama.cpp, etc.

- AI Workflows

- AI Word processor

- Chat with AI

Release Notes

Basic Requirements

- Windows 11 or 10 PC with an Internet connection

- 16 GB RAM

Requirements for Local Inference

- Windows 11 PC (preferably manufactured in the last 2 years)

- Nvidia or AMD GPU preferred, with an up-to-date GPU driver

- CUDA 11 or 12 installed, for faster local LLM inference on Nvidia GPUs

- 32 GB memory (text generation works with 16 GB but is slow)

- 12 GB minimum disk space; 24+ GB recommended

- The first AI generation is slow because of model loading time

- A fast internet connection (> 50 Mbps) is recommended for downloading large AI models

* For a truly local experience, download the local AI models (GGUF and ONNX models). Ensure your system has adequate disk space and a high-speed internet connection for a smooth setup. For optimal local AI performance, we recommend an NVIDIA RTX 3000 Series GPU (or AMD equivalent) combined with an Intel/AMD 12th+ generation CPU and at least 32 GB of RAM.
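Before downloading local models, it can help to check free disk space against the figures listed above (12 GB minimum, 24+ GB recommended). The following is only a rough sketch and not part of Fusion Quill: the function names `classify_disk_space` and `check_path` are hypothetical, and it assumes "GB" can be read as 1024³ bytes.

```python
import shutil

# Assumption: the page's "GB" is interpreted as 1024**3 bytes here.
GIB = 1024 ** 3

# Thresholds taken from the requirements list above.
MIN_DISK_GB = 12
RECOMMENDED_DISK_GB = 24


def classify_disk_space(free_bytes: int) -> str:
    """Classify free disk space against the listed local-inference figures."""
    free_gb = free_bytes / GIB
    if free_gb < MIN_DISK_GB:
        return "insufficient"
    if free_gb < RECOMMENDED_DISK_GB:
        return "minimum"
    return "recommended"


def check_path(path: str = ".") -> str:
    """Classify the free space on the drive that contains `path`."""
    return classify_disk_space(shutil.disk_usage(path).free)


if __name__ == "__main__":
    print(f"Disk space for local models: {check_path('.')}")
```

Pass the folder where you plan to store models to `check_path` to check that drive rather than the current one.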

Languages: English

File Size: 201 MB

Download
